Deepfakes, Divorce, and Deception with Nicholas Himonidis of The NGH Group

OVERVIEW

In this episode, Devon Rifkin interviews Nick Himonidis, a lawyer and digital forensics expert, who discusses the implications of deep fakes in divorce and custody cases, highlighting how AI technology has made it easier to create convincing fabrications of audio and video evidence. He shares real-world examples of how these deep fakes have been weaponized in legal disputes and emphasizes the need for legal professionals to take digital evidence seriously. Himonidis also calls for new legal frameworks to address the challenges posed by AI-generated deception.

TAKEAWAYS

- Deep fakes are fabrications of audio and video.

- AI has made deep fakes accessible to everyone.

- The technology has evolved rapidly, making it easier to create deep fakes.

- Real-world cases show the devastating impact of deep fakes in legal disputes.

- Legal professionals must take digital evidence seriously.

- Judges need to be aware of the implications of deep fakes.

- There is a need for new legal frameworks to address AI-generated deception.

- Digital evidence must be vetted thoroughly before being accepted in court.

- The ease of creating deep fakes raises ethical concerns.

- AI technology is evolving faster than legal responses.

TRANSCRIPT

Devon Rifkin: Today on the DivorceLawyer.com podcast, we have Nicholas Himonidis, president of the NGH Group, with more than 30 years of professional experience in private investigations, computer forensics and law. Mr. Himonidis has participated in, conducted and supervised thousands of investigations, computer forensic engagements, e-discovery projects and cryptocurrency blockchain forensic matters.

He has litigated cases in both the federal courts and state courts, and he has conducted and managed the investigation and e-discovery phases of hundreds of cases. His investigative experience spans financial fraud, including civil RICO claims, insurance fraud, fraudulent claims against the U.S. government, and embezzlement, digital forensics in both cybercrime and traditional crimes, and complex high-profile matrimony and custody.

Mr. Himonidis has written and lectured extensively on e-discovery, digital evidence, cryptocurrency and blockchain forensics, financial investigation and legal ethical issues related to expert witnesses. He has been qualified as an expert witness in digital forensics, the authentication of digital evidence and cryptocurrency forensics in state and federal courts, and he has provided countless expert reports on these issues. In addition, Mr. Himonidis has been appointed by the Supreme Court of New York State as a neutral digital forensic examiner and neutral cryptocurrency forensic expert on numerous occasions. He was appointed to the New York State Bar Association Task Force on emerging digital finance and cryptocurrency in 2022 and is the co-chair of the Nassau County Bar Association Cyber Law Committee.

He is also a licensed attorney, a licensed private investigator, a certified fraud examiner, a certified computer forensic specialist, and a certified cryptocurrency forensic investigator, and holds the Chainalysis Reactor Certification (CRC) credential related to cryptocurrency forensic investigations. Mr. Himonidis earned a bachelor of science degree in criminal justice from St. John’s University, where he graduated cum laude. He also graduated from St. John’s University School of Law with a Juris Doctor degree, magna cum laude, finishing in the top 2% of his class.

Welcome to the podcast, Nick.

Nicholas Himonidis: Wow, I knew all that stuff was on my resume, but I don’t remember the last time I heard it read aloud. Thanks for that lengthy introduction and trip down memory lane.

Devon: Wow. Quite a bit. Yeah, well, well deserved. That is quite a bit. That is very impressive.

Nicholas: Been a little busy over the years.

Devon: I’d say, I’d say. So the topic today is deep fakes, divorce and deception. And we’re going to explore AI-generated deep fakes, audio, video, text, and how they’re being used to fabricate evidence in divorce proceedings, from fake messages and emails to altered recordings. Very timely topic. So let’s just get right into it and let’s ask, you know, can you briefly explain what deep fakes are for our audience and how they’re created using AI?

Nicholas: Sure. Let’s take a half a step back and just say, when people think about AI today, for the most part, they think about ChatGPT and other large language models, which is the official terminology for what ChatGPT is, right? The type of AI where you ask it questions, you feed it information, you ask it for some output.

That’s what most people are familiar with, but AI is so much broader than that. And in this context, we’re talking about the use of AI to produce things that are not real. Ten years ago, you could produce a deep fake, which is basically a forgery of a digital audio recording or digital video recording, right? The classic deep fake is, and I wish I could show one, but it’s a little difficult in this context, right? We’re doing a podcast online. A classic deep fake that I show when we do CLE programs on this topic is, for example, Sean Connery, okay?

So although he died a number of years ago, there’s a video of him speaking to the audience, introducing myself and my co-presenter, saying, you know, good evening to the XYZ Bar Association, et cetera, et cetera. And you’re watching this video and you’re seeing Sir Sean Connery, who everyone is familiar with, everyone knows his voice, everyone knows what he looks like. And the audience is looking at this video of him speaking words they know to a certainty he never spoke. But it is unbelievably real and convincing because it is actually him in the video.

And here’s the thing. It is actually his voice. It’s just been pulled apart with the use of AI and digitally reassembled to say new words in a new order, which he never previously uttered. Now, the idea of doing that, creating that deep fake, right? And obviously that one is done for training and entertainment purposes and lots of these are done for entertainment purposes.

You can Google deep fakes of Tom Cruise and this one and that one saying crazy outlandish things on the internet, et cetera. But here we’re talking about strategically weaponizing the technology to do this in the context of a divorce or custody or other litigation. Doing a deep fake or the ability to do a deep fake isn’t technically new; 10 years ago you could do one. But 10 years ago, okay, and this is the key, 10 years ago it would have taken a half a dozen digital audio video engineers sitting in a really professional studio, I don’t know, a week to do a deep fake of the quality that I just referred to that we use as a demonstration when we do CLE programs for attorneys on this topic, which using free or very inexpensive AI tools, one of my junior techs on my staff did in about two or three hours. Okay, that’s the AI component, right?

Devon: Wow.

Nicholas: And I don’t want to get too far afield, but AI has done that in thousands of different other contexts, right? Taken something that was doable or theoretically doable before, but only at the cost of tremendous man hours and resources and money and high tech equipment, et cetera, et cetera, and made it so that any Tom, Dick or Harry who’s willing to spend two or three hours playing around with the AI software and learning how to do it can just do it free or cheap. And it’s led to some really, really scary real-world situations in these litigations.

Devon: So urban legend has it that you were, as usual, way ahead of your time, and at a conference a number of years back you had given a Sean Connery example and I think maybe a Terminator, was it a Terminator? And so, I mean, even that trickled down to us just based upon your reputation of being way ahead of the curve. So my question to you is, when you did that and blew everybody away a number of years back versus where we are today with how quickly things are advancing, what inning would you say we’re in today when it comes to the evolution of tools and how easy this will continue to be?

Nicholas: Maybe at the top of the third.

Devon: Top of the third. Okay.

Nicholas: Yep. And it’s scary. I mean, we have come so far so fast. The first time we did a deep fake example like for a CLE program, it probably took us a week to do it. And it was really good, but I wouldn’t call it fantastic. That was maybe less than five years ago.

A couple of months ago, we did one for a program here in Nassau County, Long Island for the Nassau County Bar Association. And one of my techs knocked out a new one in two hours that, you know, would fool anybody.

Devon: Five years later. So the next question is, how are deep fakes appearing in everyday use, in text messages, emails, and audio recordings? How are these deep fakes showing up?

Nicholas: All right, so when we talk about deep fakes for starters, right, we’re primarily talking about videos and audios that are not real at all. In other words, they’re fabricated. They were created using the person’s likeness and voice, but they’re completely fabricated, right? That’s why we call it a deep fake. How they’re showing up, I’ll give you a perfect example.

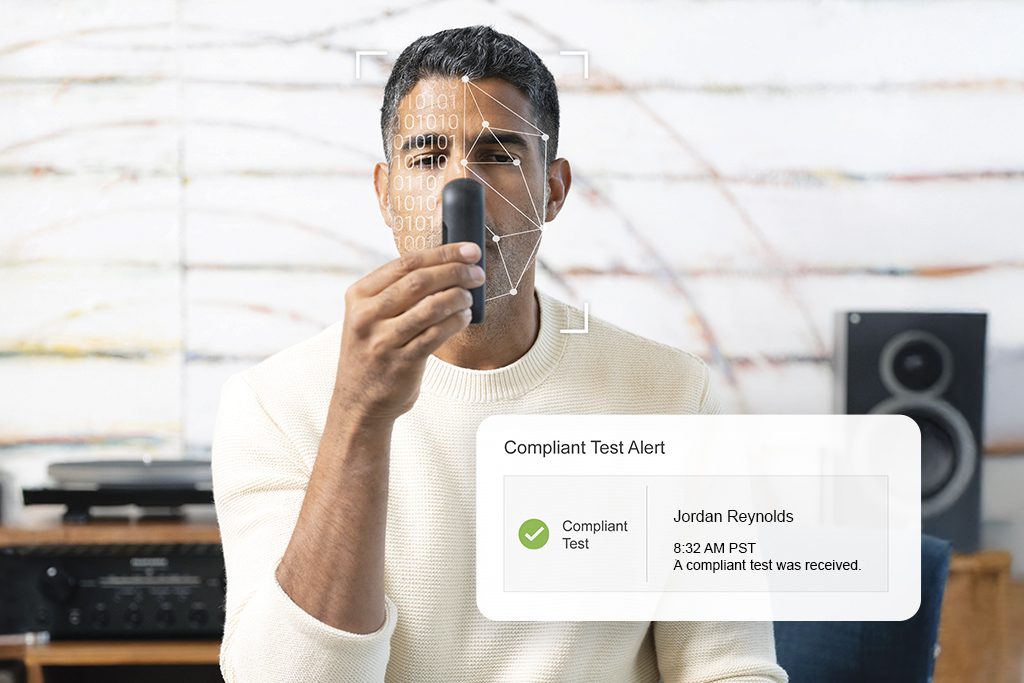

Real case, just within the last year or two. Custody dispute down in Georgia, and the mother of the children submits to the court-appointed neutral forensic evaluator some audio recordings, along with other evidence. These audio recordings are in the father’s voice. I gotta stress that. It’s not somebody mimicking the father’s voice. It doesn’t just sound like the father’s voice. It is the father’s voice.

Devon: It is his voice.

Nicholas: The recordings are of his voice. I’m being very careful how I word this. I don’t say him saying; I say his voice saying absolutely horrendous, off-the-chart horrendous things about the wife slash mother of the children, regarding one of the children, and even just about general categories of people in society who have nothing to do with the case. Saying the kind of things that would make a judge take this guy’s kids away, make him only have supervised visitation, probably make him lose his job if it ever got out, and make him a pariah in society. That kind of stuff.

The father hears these recordings because they get back to his attorney and he listens to them and his reaction is, my God, yeah, that’s my voice, but I never ever in my life said any of those things.

Now, any attorney, and I’m not speaking specifically about the attorney in this case, but any attorney hearing these recordings and then having their client look at them and say, no, I didn’t say that. The attorney is raising their eyebrows, scratching their head and going, but I just heard you say it on these recordings. And you’re, you know, and I’ve been working with you for two years and I know what your voice sounds like. And I’m not an audio expert, but that’s your voice. And you’re even admitting that’s your voice.

Here’s the thing. It turns out in that case, the mother of the children took a whole bunch of voicemails and other recordings she had of the father and put them into, in that case, an AI audio remixing platform called ElevenLabs, which is not free, but is very cheap. She uploaded all these voice exemplars, if you will, the raw material, into ElevenLabs. And once she’s done that, and the AI has analyzed and indexed and gotten it all ready, there’s no computer or audio engineering skill required of the user. You just upload this raw material and then literally type what you want the AI to say in the voice of the person whose voice you just gave it to use. Okay, so she created these deep fake audio recordings, recorded them from the computer where she created them onto her iPhone and then submitted them as if they were real.

Devon: And you’re saying she wasn’t in Silicon Valley. She didn’t have a tech team. This is at the most retail of levels. This is some woman in her home who did this.

Nicholas: The tool she used to do this was a $15 a month subscription, $15 or $19 a month subscription to the ElevenLabs platform and a bunch of audio recordings that she already had collected over a few years, like actual ones, voicemails, some recordings she had made on her iPhone of the father’s voice.

It fooled the forensic evaluator, had the court fooled, had everybody fooled until the attorneys, you know, at the behest of the father who swore this couldn’t be real, hired us to examine those recordings. And just so you know, it’s not as easy as it sounds to just examine a recording and know that it’s fake.

If we had been given the original recording created by the ElevenLabs tool from the woman’s laptop computer, for example, we would know very quickly looking at it, what it is and what it’s not. We would know that it’s a deep fake, forensically looking at it. But that’s not what we got. What we got was an iPhone recording because, and I hate to give people with malicious, terrible motives credit for anything, but I’ll say she was smart enough to play the recording from her computer where she created it onto her iPhone. So that at least on the surface to someone who wasn’t really, really deeply looking at it in a forensic way, it was just gonna appear as a regular iPhone recording, which is what it was.

We got to the bottom of it. We figured out what we were looking at. We worked with the attorney. The attorney brought the heat, went to court, got a court order to examine the mother’s computer devices, et cetera, so that we could get the rest of the evidence we needed. And before any of that happened, and this is just so we’re clear, none of this is speculation on my part about what happened here, because ultimately it was admitted by the mother. She admitted exactly precisely what she did, which tracks exactly precisely with what I just told you we had concluded from our forensic investigation.

Devon: So what kind of fake materials are most common? Texts, emails, voice notes, video, is there a trend?

Nicholas: Sure. Videos, as cool as they are in example form and in a teaching context, we have seen them in the wild, in real cases. They’re not as common because it’s tougher to make them, I’m gonna say, 100% convincing. Audios, such as the ones I just described, are huge. We’re seeing them all the time because they’re so easy to make. They’re so effective and they are so incredibly difficult for anyone other than a forensic professional to detect as not real. So they’re huge. You know, and if they go unchallenged or, you know, undiscovered for what they are, it can be devastating, as I said, you know, in the case we were just talking about.

But we also see it with text messages. That’s very big now. Just fabricated text messages. There are any number of tools. And by the way, I don’t mention most tools by name. I mentioned ElevenLabs just because it was part of a specific case we were talking about. But I don’t, and people ask me all the time in like CLE programs, I say no. I’m not gonna sit here and rattle off a list of naughty tools, but there are a lot of them out there. Many of them are free. Most of them, if they’re not free, they’re real cheap. You can go and create fake text message threads, like a whole thread that looks like a text message thread between you and the other party, and just create it in three minutes and click a button and export it as a PDF and give it to your lawyer and say, yeah, here.

Devon: Gotcha. So with all these technological changes and tools and whatnot, maybe the larger, more interesting question is, how is the legal system going about handling AI-generated altered digital evidence?

Nicholas: That is the million dollar question. So from the point of view of attorneys dealing with this, right, which is, I call it the first line of defense.

What I’ve been talking to family lawyers about and telling them now for quite some time is, you know, there was a time when your client, having just listened to an audio or been shown a photo of them doing something, looked at you and said, I didn’t do that. That’s not real. There was a time where, I’m not saying it was okay to just dismiss what they were saying, but, you know, how seriously you took their denial may have varied, you know, on a scale. We’re past that point now.

You’re confronted with a video, an audio, a digital photo, a text message thread, or any other piece of digital evidence, and your client looks you in the eye and says, that’s not real, I didn’t do that. You, in my opinion, you have a very serious obligation now, given where we are technologically, you have to take that extremely seriously, and you have to go through all the steps necessary to vet that out and give the client the benefit of the doubt that they may actually be telling the truth that what you just saw them doing on video, what you just heard them saying on audio, which seems incredibly real and legit, may not be. And you have to give them the benefit of that doubt. That’s, I think, one of the key takeaways for family lawyers anyway, as a first line of defense.

On the flip side of that, lawyers have to be really, really on guard for the client who presents them with affirmative evidence. The client who comes in and says, aha, I have this audio recording of the other party, my estranged spouse, saying all this stuff. You can’t just take that at face or ear value, as the case may be. Whether it’s a video, an audio, like we said, or a text message thread that the client’s giving you a PDF copy of, horrendous as it may be, as tremendously relevant as it might be, or even, you know, as dispositive and helpful in your case as it might be if it’s real, you have to take those extra steps to at least do some diligence to ensure that whatever your client’s proffering to you is actually legit. Because it’s just too easy to fake this stuff now.

And that’s really sad, but that’s a whole other philosophical discussion about how did we get here? Here is where we are, right? So there’s that. And then I had a very unique, I don’t know about unique, very interesting opportunity at a recent CLE program I did on this topic. And there were three or four judges in the audience, judges who deal with litigation between parties, family law and otherwise. And we had a really interesting conversation, because in the end, these judges, several of whom I know and are very well respected for their academic and legal knowledge and their practicality, the way they handle cases, they basically said in the end what we’re talking about right here doesn’t really change or require any change with the rules of evidence or the way the court handles these things per se.

The courts have rules in place to deal with the authentication of evidence. You know, whether or not it’s admissible, if it is admissible, how you challenge, you know, the weight of that evidence, et cetera, et cetera. And in the end, it’s not so much that the court, I mean, the courts need to be aware of this, obviously, but it’s not so much that the courts need to change the mechanics of how they do things. Lawyers need to learn to be on the lookout for these issues, spot them early on in the case, not the night before trial, you know, or the day trial starts. And vet these things out during the course of a case through discovery and through having forensics done if necessary, while there’s time to do that. And that’s kind of how, you know, that conversation, you know, ended up, and I tend to agree with that.

Devon: So we’ve spoken about specifics and I think what’s interesting is sort of the evolution of where we were to how we got here. And so my last question is, do you have an opinion on how new legal frameworks might specifically target AI generated deception going forward?

Nicholas: Yeah, I do. And before I answer that, I already made one, like, aside comment, you know, that, you know, how we got here is a whole other discussion. And I’m not so comfortable about how we got here and where we are, but it is where we are.

So I’ll start by saying that we’ve been talking about this phenomenon, this situation that we’re in, specifically in the context of like family law litigation, divorce cases, custody cases, and it is a really big issue there. But that’s not by a long shot the only place this is a really serious problem.

You could Google and find news articles just from the last year of really serious situations where this type of technology and deep fakes have been used in even more, I want to say, serious and frightening ways. Just for example, a woman in, I believe it was the Midwest, just a few months ago, gets a phone call on a Saturday afternoon. Her teenage son is supposed to have gone out with his friends to a high school football game. And she gets a phone call and the caller tells her that he’s a drug dealer and her son stumbled into their drug deal and then says, you know, hang on, I’m gonna put him on the phone. And of course he then plays, she doesn’t realize it’s being played, but he plays an AI-generated, quote, recording, right? No, it actually is a recording, right? That he’s playing, she just doesn’t know it’s a recording of her son’s voice saying, oh, help me, you know, they have me, they’re gonna kill me, blah, blah, you gotta do what they say, et cetera, et cetera. And the guy is basically extorting her and saying, you need to do this, that, or the other thing, send us this money, you know, come bring us whatever, or we’re gonna kill your kid. And fortunately, that particular story has a, quote, happy ending. She was able to reach her other son, who was able to get over to where the other, you know, the brother was and say, I don’t know what you’re talking about. I’m looking at him right now. He’s with these five other people. He’s sitting in the bleachers at the football game right now.

Okay. But those kinds of situations are rearing their heads like every week. And that’s really, really scary when you can play a completely fabricated audio of some teenager’s voice to his mother and his mother swears that’s her son because it is actually her son’s voice, right? He’s just never said those words before.

We’re in a really scary place.

Devon: Mmm. Yup. It sounds like it. I mean, that’s serious stuff.

Nicholas: And when it’s that easy to do.

Devon: Serious stuff.

Nicholas: Yeah, it’s being weaponized. So my answer, circling back to your initial question, is in terms of legal frameworks, do I think we need them? Boy, do I.

And I think there, and I don’t wanna go too far off topic here, but there’s been a push for legal regulation with respect to the generative AIs, like the ChatGPTs and the large language models that people are more familiar with on a day-to-day basis that people interact with on a quote, legitimate basis every day. Someone needs to pick up on what we’re talking about, this aspect of AI and get some serious guardrails in place. Perhaps, you know, whatever, enhanced, you know, criminal laws, you know, for using this type of technology, you know, to do some of these illicit things or whatever the case may be, you know, leave that to the folks who come up with those things.

But there’s no question in my mind that there is very, very little right now, and there needs to be a lot more really fast, because this stuff is exploding onto the scene, so to speak, and has been now for some time, and it’s not slowing down.

Devon: I mean, we’ve been on a short amount of time here and probably just scratched the surface. And it’s scary, it’s frightening, insert word, and it’s something that people really need to know about. So I’m really glad that we had you on to discuss this. My last question is, you had mentioned that you work with family lawyers. Who hires you, and how can people best connect with you?

Nicholas: So usually our client hires us through their counsel, you know, for privilege reasons, et cetera. But it’s normally the lawyer that reaches out to us in these situations. And they can call us anytime. They can easily look us up. It’s thenghgroup.com, T-H-E-N-G-H-G-R-O-U-P.com. And we get calls about this, specifically about this type of stuff, probably two or three or more a month now.

Devon: Okay, terrific. Well, thanks so much, Nick. Thanks for being on. Appreciate it. Great seeing you.

Nicholas: You’re welcome. It’s been my pleasure. Anytime, anytime. Thanks. Likewise.

The DivorceLawyer.com Podcast – Deepfakes, Divorce, and Deception, Featuring Nicholas Himonidis, President and CEO – The NGH Group

Contact Information – Find Nicholas Himonidis and The NGH Group online here, TheNGHGroup.com.